Input tensor (Inference Overlay)

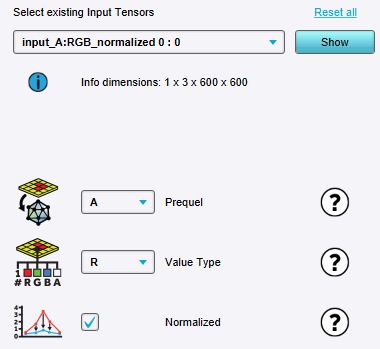

[[File:inference_overlay_wizard_tensor_link.jpg|thumb|right|An input tensor link in the Inference Overlay Wizard.]] | |||

Latest revision as of 11:44, 19 December 2024

An Input tensor is a multi-dimensional data array that serves as input for Neural Networks. Generally these input tensors are filled with (parts of) one or more images of a given width and height, with one or more color channels. The neural network processes these images to classify them or to detect features in them.
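As a rough illustration of this data structure, the sketch below builds an empty input tensor as nested Python lists. It assumes an NCHW layout (n images, c channels, then height and width), which matches the n and c naming used by tensor links; the layout a particular network expects is defined by that network, and the function names are hypothetical.

```python
def make_input_tensor(n_images, n_channels, height, width, fill=0.0):
    """Create a nested-list tensor with shape (n, c, h, w), i.e. NCHW layout."""
    return [
        [
            [[fill for _ in range(width)] for _ in range(height)]
            for _ in range(n_channels)
        ]
        for _ in range(n_images)
    ]


def shape(tensor):
    """Return the shape of a regular (non-ragged) nested-list tensor."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)


# One RGB image of 4 x 3 pixels: n=1, c=3, h=3, w=4
t = make_input_tensor(1, 3, 3, 4)
print(shape(t))  # (1, 3, 3, 4)
```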

In the Tygron Platform, Grid Overlays (often Satellite Overlays or WMS Overlays) can also serve as input for neural networks. How an Inference Overlay fills the input tensor of a neural network is configured using Tensor Links.

A tensor link references:

- The input tensor, identified by the (n, c) tuple of image index n and channel index c that appears in the name of the tensor link, together with the width and height of the input tensor.

- The prequel of the Inference Overlay from which the values are obtained.

- The value type of the prequel's data:

  - When it is a color, specify which color channel should be used: Red (R), Green (G), Blue (B) or Alpha (A).

  - When it is a floating point value, simply specify DEFAULT.

- Whether the value should be normalized. Color channels are always normalized using the range 0 to 255. Floating point values are normalized using the calculated minimum and maximum values of the referenced prequel overlay.
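The following is a minimal sketch of how the fields of a tensor link could be applied to fill one (n, c) plane of the input tensor. `TensorLink`, `link_values` and `fill_tensor` are hypothetical names, not a Tygron Platform API. The sketch assumes that color prequels yield (R, G, B, A) tuples with values from 0 to 255, that floating point prequels yield plain numbers, and that normalization maps values to the range 0 to 1: color channels by dividing by 255, floating point values using the minimum and maximum of the prequel grid.

```python
from dataclasses import dataclass

# Assumed position of each color channel in an (R, G, B, A) tuple.
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2, "A": 3}


@dataclass
class TensorLink:
    """Hypothetical model of a tensor link; field names are illustrative."""
    n: int            # image index in the input tensor
    c: int            # channel index in the input tensor
    prequel: str      # key of the prequel to read values from
    value_type: str   # "R", "G", "B" or "A" for colors, "DEFAULT" for floats
    normalize: bool


def link_values(link, grid):
    """Extract one channel (or the float value) per cell, optionally normalized."""
    if link.value_type == "DEFAULT":
        values = [[float(v) for v in row] for row in grid]
        if link.normalize:
            flat = [v for row in values for v in row]
            lo, hi = min(flat), max(flat)
            span = (hi - lo) or 1.0  # avoid division by zero on a constant grid
            values = [[(v - lo) / span for v in row] for row in values]
    else:
        idx = CHANNEL_INDEX[link.value_type]
        values = [[float(px[idx]) for px in row] for row in grid]
        if link.normalize:
            # Assumed: colors use the fixed 0..255 range, mapped to 0..1.
            values = [[v / 255.0 for v in row] for row in values]
    return values


def fill_tensor(tensor, links, prequels):
    """Write each link's values into the (n, c) plane of an NCHW tensor."""
    for link in links:
        tensor[link.n][link.c] = link_values(link, prequels[link.prequel])
    return tensor


# Hypothetical prequels "A" (colors) and "B" (floats), each a 1 x 2 grid.
prequels = {
    "A": [[(255, 0, 0, 255), (0, 128, 0, 255)]],
    "B": [[2.0, 4.0]],
}
tensor = [[[[0.0, 0.0]] for _ in range(2)]]  # shape (1, 2, 1, 2)
links = [TensorLink(0, 0, "A", "R", True), TensorLink(0, 1, "B", "DEFAULT", True)]
fill_tensor(tensor, links, prequels)
print(tensor)  # [[[[1.0, 0.0]], [[0.0, 1.0]]]]
```

Computing the floating point minimum and maximum from the grid itself is what makes this normalization depend on the prequel's data rather than on the network.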

Because the input tensor has a limited width and height, the Inference Overlay moves an input window of this width and height over the prequel grid. Because features can lie on the edges of a window, the step by which the window is moved can be configured using the Stride fraction model attribute.
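A sketch of this sliding-window placement, assuming the step is the Stride fraction multiplied by the window size (rounded down, at least one cell) and that one extra window is aligned with the far edge so every cell is covered. These rules and the function names are assumptions for illustration; the Inference Overlay's actual stepping may differ.

```python
def window_origins(grid_size, window, stride_fraction):
    """Start offsets along one axis for a window sliding over a grid.

    Assumes step = stride_fraction * window (rounded down, at least 1 cell)
    and appends a final window aligned with the grid edge if needed.
    """
    if grid_size <= window:
        return [0]
    step = max(1, int(window * stride_fraction))
    origins = list(range(0, grid_size - window + 1, step))
    if origins[-1] != grid_size - window:
        origins.append(grid_size - window)  # cover the remaining edge cells
    return origins


def windows(grid_w, grid_h, win_w, win_h, stride_fraction):
    """All (x, y) top-left corners of input windows over a grid_w x grid_h grid."""
    return [
        (x, y)
        for y in window_origins(grid_h, win_h, stride_fraction)
        for x in window_origins(grid_w, win_w, stride_fraction)
    ]


print(window_origins(10, 4, 0.5))  # [0, 2, 4, 6]
print(window_origins(10, 4, 1.0))  # [0, 4, 6]
```

With a stride fraction below 1, neighbouring windows overlap, so a feature that is cut off at the edge of one window can still lie entirely inside another; the cost is more windows, and thus more inference runs, per grid.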

Notes

- The Inference Overlay wizard reports an issue when a tensor link references a prequel that has not yet been specified for the Inference Overlay.

- We may decide in the near future to obtain the expected normalization min and max values from the metadata of the Neural Network instead, as this is more robust.

See also

- A prequel (Inference Overlay)

- B prequel (Inference Overlay)