Output tensor (Inference Overlay)


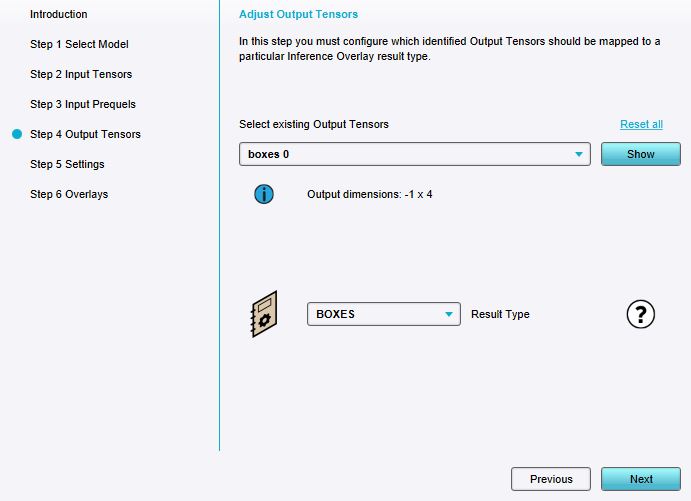

An output tensor link in the Inference Overlay Wizard.


Latest revision as of 10:19, 9 October 2024

An output tensor is a multi-dimensional data array that serves as the output of a neural network. Generally, output tensors are the results of the operations that a convolutional neural network applies to its input tensors. Depending on the type of neural network, multiple types of output tensors can be generated.
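To make the relation between input and output tensors concrete, the following is a minimal sketch in plain Python. It is not Tygron Platform code: the function names `conv2d_valid` and `shape`, the kernel names, and the values are all assumptions chosen for illustration. It applies two small convolution kernels to a 4x4 input tensor and stacks the results into one output tensor with a channel dimension.

```python
# Illustrative sketch only; none of these names come from the Tygron Platform.

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the result grid."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def shape(tensor):
    """Return the dimensions of a rectangular, non-empty nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


# Hypothetical 4x4 single-channel input tensor.
input_tensor = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

# Two hypothetical 2x2 kernels; each one yields one output channel.
kernels = {
    "mean": [[0.25, 0.25], [0.25, 0.25]],
    "horizontal_edge": [[1, -1], [1, -1]],
}

# Output tensor with layout (channels, height, width).
output_tensor = [conv2d_valid(input_tensor, k) for k in kernels.values()]

print(shape(input_tensor))   # (4, 4)
print(shape(output_tensor))  # (2, 3, 3)
```

A real network chains many such operations, so the shape and meaning of its output tensors depend on the network's architecture rather than on a single kernel.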

In the Tygron Platform, the following output tensors are supported:

Notes

- The Inference Overlay Wizard will report an issue when a result type is referenced by a tensor link but has not yet been added to the Inference Overlay.
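The check described in the note can be sketched as follows. This is a hypothetical illustration of the rule, not the wizard's actual implementation: the `TensorLink` class, the `find_missing_result_types` function, and the names `RESULT_A` and `RESULT_B` are assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TensorLink:
    """Hypothetical link between an output tensor and a result type."""
    tensor_name: str
    result_type: str


def find_missing_result_types(links, overlay_result_types):
    """Return one issue message per link whose result type is not in the overlay."""
    issues = []
    for link in links:
        if link.result_type not in overlay_result_types:
            issues.append(
                f"Tensor link '{link.tensor_name}' references result type "
                f"'{link.result_type}', which has not been added to the overlay."
            )
    return issues


# Placeholder data: only RESULT_A has been added to the overlay.
links = [
    TensorLink(tensor_name="output_0", result_type="RESULT_A"),
    TensorLink(tensor_name="output_1", result_type="RESULT_B"),
]
overlay_result_types = {"RESULT_A"}

for issue in find_missing_result_types(links, overlay_result_types):
    print(issue)
```

In this sketch the issue disappears once `RESULT_B` is included in `overlay_result_types`, which mirrors adding the missing result type to the Inference Overlay.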