How to train your own AI model for an Inference Overlay


To train your own Mask R-CNN[1] AI model for an Inference Overlay in the Tygron Platform, you will need datasets to train on.

To export these datasets from your projects in the Tygron Platform, follow How to export AI Training Data.

Tygron AI Training

The Tygron AI Suite[2] is available on GitHub. This repository contains the files needed to configure a Conda environment for training a new Mask R-CNN AI model.

Conda-forge's Miniforge[3] is used to manage Conda environments and to run Jupyter Notebooks with Python.

The example notebooks[4] in the repository can be used as a starting point for training your own model.

The TygronAI yml-file[5] in the repository can be used to initialize a Conda environment. It includes PyTorch and Jupyter Notebook.
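Once the environment has been created and activated as described in the steps below, you may want to confirm that the packages from the yml-file are importable before opening the notebook. The following is a minimal sketch, not part of the Tygron AI Suite: it assumes that PyTorch and Jupyter Notebook are importable as the modules `torch` and `notebook`, and the helpers `missing_modules` and `pick_device` are hypothetical. `pick_device` reports the same cuda-or-cpu distinction that the example notebook prints in its Configuration step.

```python
import importlib.util

# Assumption: PyTorch and Jupyter Notebook from tygronai.yml are importable
# under the module names "torch" and "notebook".
REQUIRED_MODULES = ["torch", "notebook"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def pick_device():
    """Return "cuda" if PyTorch is installed and can see a CUDA GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch  # imported only when available, so the check never crashes

    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("missing modules:", missing_modules(REQUIRED_MODULES) or "none")
    print("training device:", pick_device())
```

Saved as a script and run with python from the Miniforge Prompt after conda activate tygronai, it should print "none" for missing modules and, on a machine with a supported GPU, cuda as the training device.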

How to train your own AI model for an Inference Overlay:

- If you are not familiar with GitHub, download the repository as a zip file containing all the files you need. Otherwise, clone the git repository.

- If you downloaded the zip file, unzip it. Open the folder containing the files (the local repository) and copy the path to this directory.

- Download[3] and install Miniforge.

- Open the Miniforge Prompt application.

- Change the directory to the path of the local repository. For example:

cd user/git/tygronai/

This directory should contain the tygronai.yml file.

- Create and initialize a new Conda environment from the tygronai.yml file using:

conda env create -n tygronai -f tygronai.yml

Press Enter to run the command.

- Wait for the downloads and unpacking to complete.

- Activate the newly created tygronai environment using:

conda activate tygronai

The name (tygronai) should now appear in place of (base) at the start of the prompt.

- Start the Jupyter Notebook application by typing:

jupyter notebook

- A browser will open with the Jupyter Notebook application.

- The application should open in the local repository folder. If it does not, browse to the folder of the tygron-ai-suite repository.

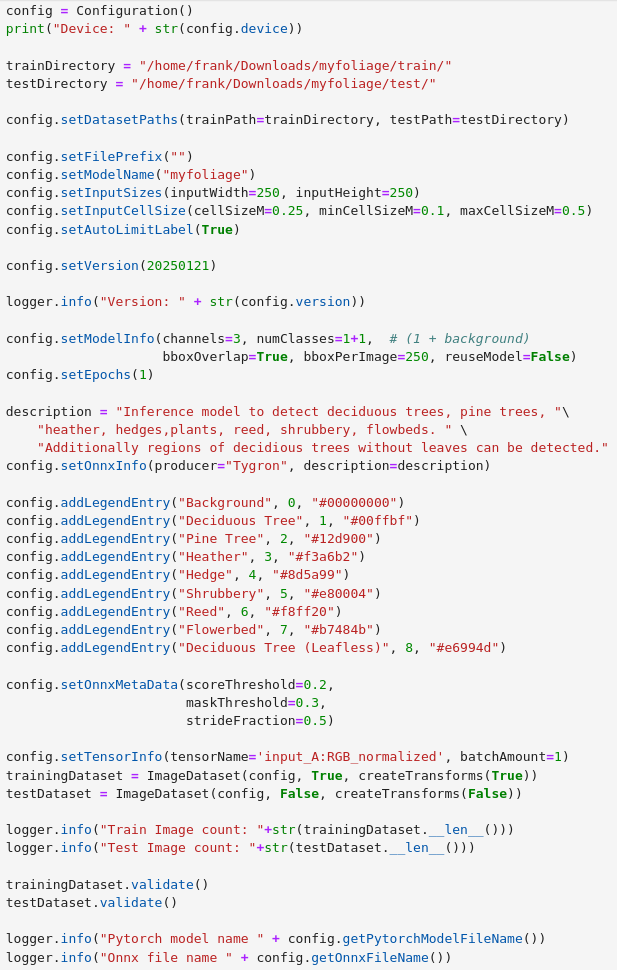

- Once in the correct folder, open example_config.ipynb.

- Adjust the following parameters:

trainDirectory = "PATH TO TRAIN FILES"

testDirectory = "PATH TO TEST FILES"

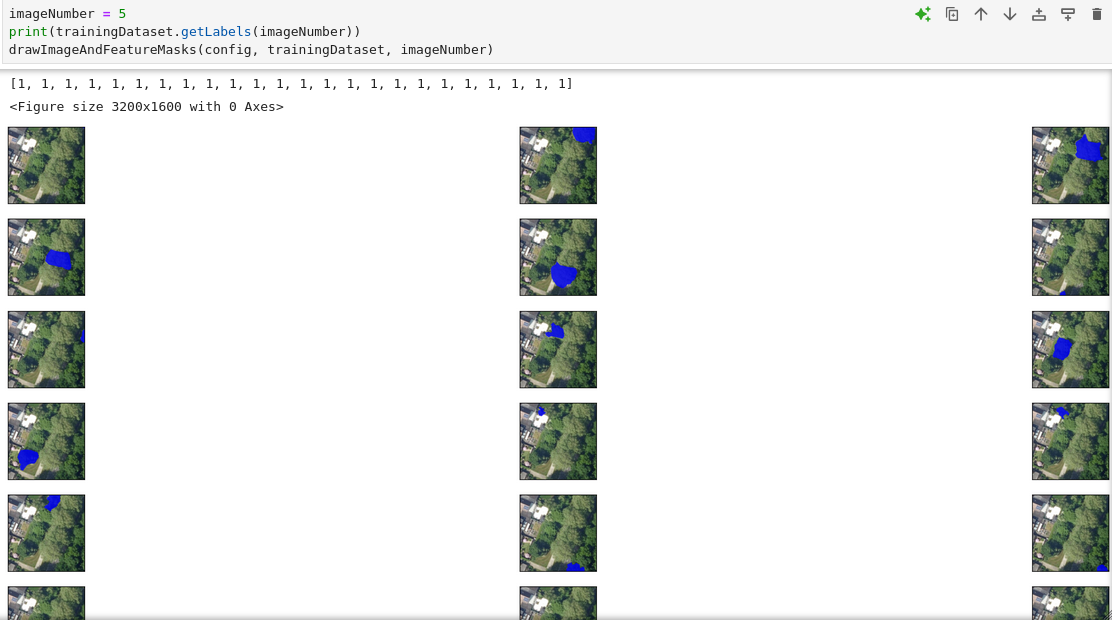

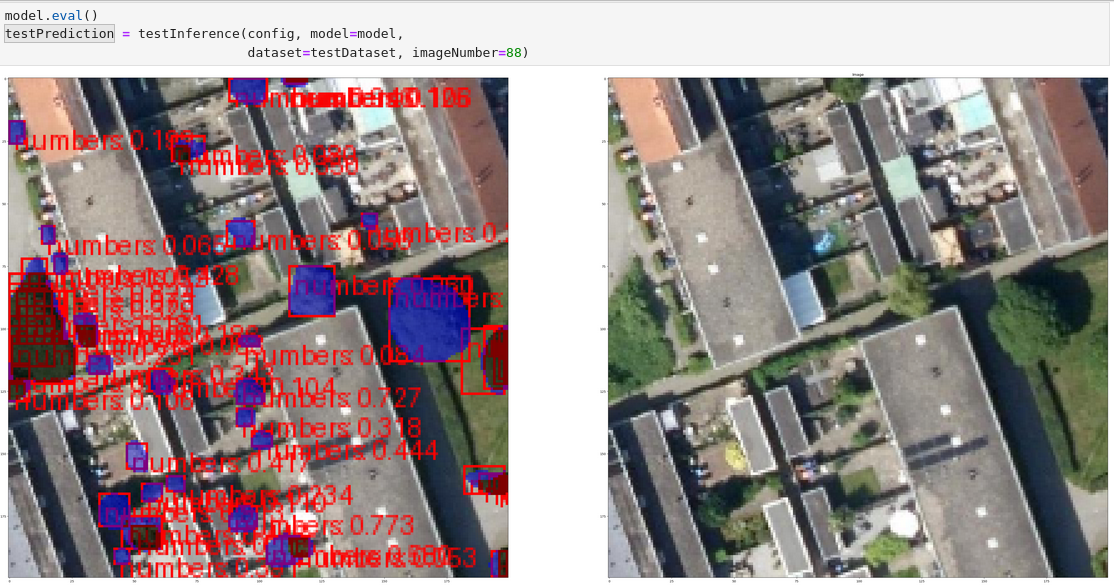

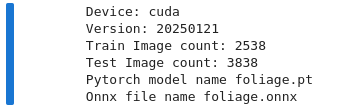

Set these to the paths of the folders containing the exported datasets. See How to export AI Training Data for more information.

- Press the double-arrow button named Restart kernel and execute all cells to run the Jupyter Notebook. See the screenshots for what to expect.

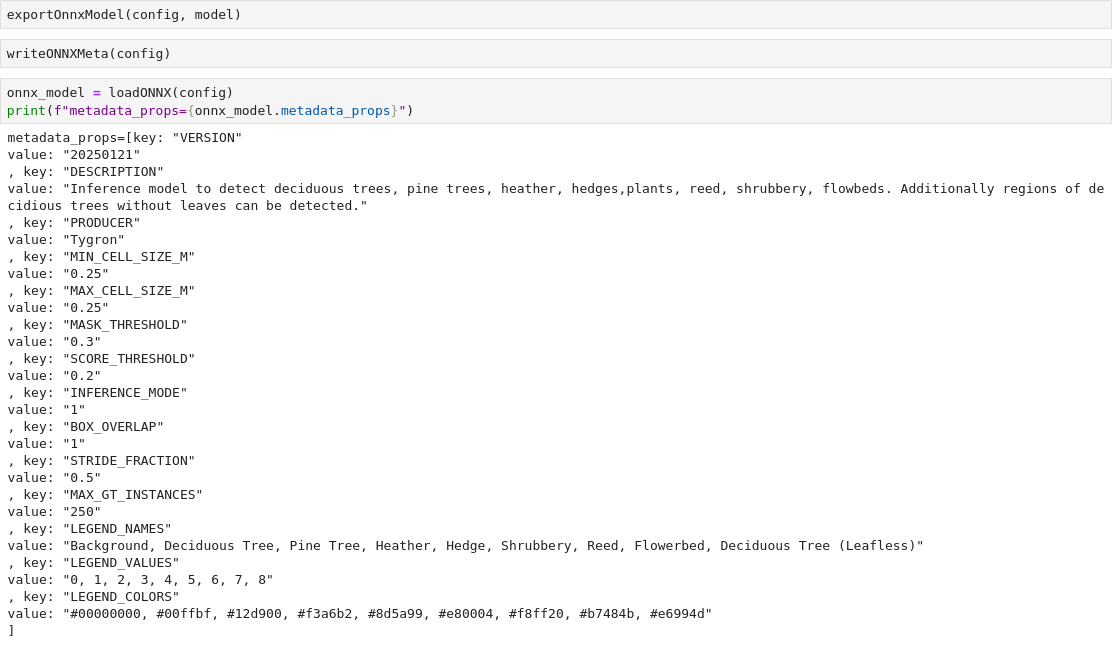

- Eventually an ONNX file will be created.
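If the notebook reports that no training or test data was found, the values entered for trainDirectory and testDirectory are the first thing to check. The sketch below is a hypothetical helper, not part of the Tygron AI Suite; it only assumes that the exported datasets are ordinary files somewhere below each directory, and it counts them recursively, in line with the note that training data may sit in sub-folders.

```python
from pathlib import Path


def count_files(directory):
    """Count every regular file in directory and all of its sub-folders."""
    root = Path(directory)
    if not root.is_dir():
        raise FileNotFoundError(f"not a directory: {root}")
    # rglob("*") walks sub-folders too; no file extension is assumed.
    return sum(1 for path in root.rglob("*") if path.is_file())


def check_dataset_dirs(train_directory, test_directory):
    """Return file counts for both folders, raising if a folder is missing or empty."""
    counts = {
        "train": count_files(train_directory),
        "test": count_files(test_directory),
    }
    for name, count in counts.items():
        if count == 0:
            raise ValueError(f"the {name} directory contains no files")
    return counts
```

Called as check_dataset_dirs(trainDirectory, testDirectory) in a notebook cell, it returns the number of files found in each folder, or raises an error naming the folder that is missing or empty.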

Notes

- The training set can include data files from sub-folders. For example, if data was exported to user/documents/foliage/train/demo_training_data, PATH TO TRAIN FILES can be set to user/documents/foliage/train/. This makes it easier to train on data exported from multiple projects and to combine it with your own custom data.

- Always check that the images of the test and train datasets come from the same overlay, for example that both are Satellite Overlay images.

- Once an ONNX file is created, open the folder containing it and drag the file into the Tygron Client Application. It is then automatically imported with an Inference Overlay.

- In the Configuration step of the example_config notebook, cuda should be printed. If cpu is printed instead, the model will be trained on your CPU, which is significantly slower. To find out which CUDA version your GPU supports, open a command-line tool on your computer and run nvidia-smi.

- To train a better model, increase the number of training epochs, for example to 20:

config.setEpochs(20)

However, training for too many epochs may not improve the model: it can overfit the training data and become too strict.
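A common way to decide when more epochs stop helping is patience-based early stopping on a validation loss. This is a general technique swapped in for illustration; the Tygron AI Suite is not known to expose it, and `best_epoch_count` with its `patience` and `min_delta` parameters is a hypothetical helper. It assumes you have recorded one validation loss per epoch, for example from a trial run, and returns the epoch with the lowest loss seen before the loss stopped improving.

```python
def best_epoch_count(val_losses, patience=3, min_delta=0.0):
    """Return how many epochs to train, using patience-based early stopping.

    val_losses[i] is the validation loss measured after epoch i + 1.
    Scanning stops once the loss has not improved by more than min_delta
    for `patience` consecutive epochs; the best epoch seen so far is returned.
    """
    if not val_losses:
        raise ValueError("need at least one validation loss")
    best_loss = val_losses[0]
    best_epoch = 1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[1:], start=2):
        if loss < best_loss - min_delta:
            # Meaningful improvement: remember this epoch and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch
```

For example, best_epoch_count([1.0, 0.8, 0.7, 0.72, 0.75, 0.74, 0.6], patience=3) returns 3, because the loss fails to improve for three epochs after epoch 3; with patience=5 the scan reaches the lower loss at epoch 7 and returns 7. The result could then be passed to config.setEpochs for the final run.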

How-to's

- How to export AI Training Data

- How to import an ONNX file using drag and drop

- How to import an ONNX file for an Inference Overlay

- How to adjust a Neural Networks metadata


References

- [1] Mask R-CNN ∙ Found at: https://pytorch.org/vision/main/models/mask_rcnn.html ∙ (last visited: 2025-10-13)

- [2] Tygron AI Suite ∙ Found at: https://github.com/Tygron/tygron-ai-suite ∙ (last visited: 2025-10-13)

- [3] Miniforge ∙ Found at: https://conda-forge.org/download/ ∙ (last visited: 2025-10-13)

- [4] Example Config Jupyter Notebook of the Tygron AI Suite ∙ Found at: https://raw.githubusercontent.com/Tygron/tygron-ai-suite/refs/heads/main/example_config.ipynb ∙ (last visited: 2025-10-13)

- [5] Conda environment YML file ∙ Found at: https://raw.githubusercontent.com/Tygron/tygron-ai-suite/refs/heads/main/tygronai.yml ∙ (last visited: 2025-10-13)