Neural Network (Inference Overlay)


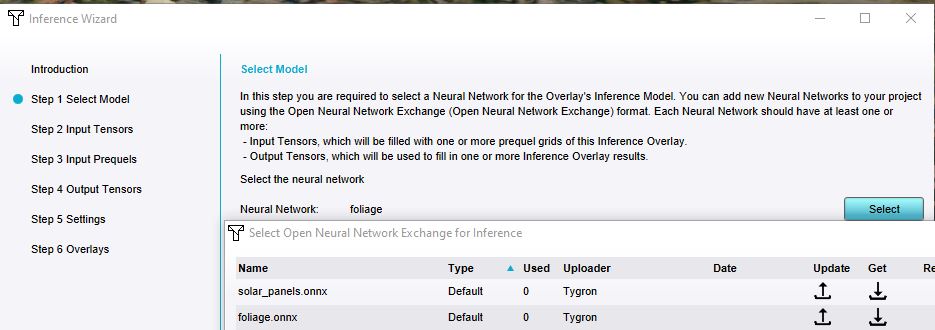

{{:Neural | [[File:inference_overlay_neural_network.jpg|thumb|right|Selecting a Neural Network in the [[Inference Overlay]] Wizard]] | ||

A Neural Network in the {{software}} is a pre-trained convolution network{{ref|Cheatsheet}} that can be used by an [[Inference Overlay|AI Inference Overlay]] to classify or detect features given one or more input [[Overlay]]s. | |||

Neural Networks are stored in the {{software}} as data [[item]]s with a reference to an [[ONNX]]-file (Open Neural Network Exchange format{{ref|ONNX}}) visible via Netron{{ref|Netron}}. | |||

[[Input tensor (Inference Overlay)|Input]] and [[Output tensor (Inference Overlay)|output]] for neural networks is handled using data tensors. These tensors are multi-dimensional data arrays. They are automatically identified when selecting or adding a new Neural Network. | |||

Whether a Neural Network classifies or detects objects given an input depends on its inference model. Such a model consists using AI-software, such as [[PyTorch]]. Neural Networks can indicate what type of network they are by defining the [[Inference mode (Inference Overlay)|INFERENCE_MODE]] attribute in their metadata. | |||

===Supported Convolution Types=== | |||

# Image Classification | |||

#* Classifies a picture using labels, in combination with a predicted probability per label | |||

# Detection (with masks and bounding boxes) | |||

#* Detects up to several features in a picture | |||

#* Predicts probabilities of features and where they are located | |||

===Parameters in Metadata=== | |||

Neural Networks can also store default parameters for Inference Overlay, such that they are setup more properly once set for an Inference Overlay. The following parameters are used: | |||

* Producer and version | |||

* Description | |||

* Preferred min and max [[Grid cell size]] (m). | |||

* [[Model attributes (Inference Overlay)|Inference Overlay attributes]]. | |||

* [[Inference Overlay]]'s legend [[Labels result type (Inference Overlay)|labels]], with corresponding value and color (in hex-format). | |||

* Maximum amount of detectable features per inference window. | |||

{{article end | |||

|seealso= | |||

* [[Inference Overlay]] | |||

* [[ONNX]] | |||

* [[PyTorch]] | |||

* [[Demo Training Data Project]] | |||

|notes= | |||

* Neural networks that are not referenced by an AI Inference Overlay may be removed from your project when a project is saved. | |||

|howtos= | |||

* [[How to export AI Training Data]] | |||

* [[How to train your own AI model for an Inference Overlay]] | |||

* [[How to import an ONNX file using drag and drop]] | |||

* [[How to import an ONNX file for an Inference Overlay]] | |||

* [[How to adjust a Neural Networks metadata]] | |||

|references= | |||

<references> | |||

{{ref|ONNX | |||

|name=ONNX | |||

|author= | |||

|page= | |||

|source= | |||

|link=https://onnx.ai/ | |||

|lastvisit=2024-09-21 | |||

}} | |||

{{ref|Cheatsheet | |||

|name=Cheatsheet | |||

|author= | |||

|page= | |||

|source= | |||

|link=https://stanford.edu/~shervine/teaching/cs-230/cheatsheet-convolutional-neural-networks | |||

|lastvisit=2024-09-21 | |||

}} | |||

{{ref|Netron | |||

|name=Netron | |||

|author= | |||

|page= | |||

|source= | |||

|link=https://netron.app/ | |||

|lastvisit=2024-10-14 | |||

}} | |||

</references> | |||

}} | |||

{{InferenceOverlay nav}} | {{InferenceOverlay nav}} | ||

Latest revision as of 14:01, 17 October 2025

A Neural Network in the Tygron Platform is a pre-trained convolutional neural network[1] that an AI Inference Overlay can use to classify or detect features based on one or more input Overlays. Neural Networks are stored in the Tygron Platform as data items that reference an ONNX file (Open Neural Network Exchange format[2]). The structure of that file can be inspected with Netron[3].

Input and output for neural networks are handled using data tensors, which are multi-dimensional data arrays. The input and output tensors are identified automatically when a Neural Network is selected or added.
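The sketch below shows what identifying an input tensor can involve: it compares the tensor's shape with the number of input Overlays. It rests on several hypothetical assumptions. The layout is NCHW (batch, channels, height, width), with one channel per input Overlay. Inference windows are square. Dynamic dimensions are given as `None`. `TensorInfo` and `check_input_tensor` are illustrative names and are not part of the Tygron Platform.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class TensorInfo:
    """Hypothetical description of a model input or output tensor."""

    name: str
    shape: Tuple[Optional[int], ...]  # None marks a dynamic (unfixed) dimension


def check_input_tensor(tensor: TensorInfo, overlay_count: int, window: int) -> list:
    """Return human-readable problems; an empty list means the tensor fits.

    Assumes an NCHW layout where the channel count equals the number of
    input Overlays and height and width equal the inference window size.
    """
    if len(tensor.shape) != 4:
        return [f"{tensor.name}: expected 4 dimensions (N, C, H, W), got {len(tensor.shape)}"]
    _, channels, height, width = tensor.shape
    problems = []
    if channels is not None and channels != overlay_count:
        problems.append(f"{tensor.name}: {channels} channels but {overlay_count} input Overlays")
    for axis, size in (("height", height), ("width", width)):
        if size is not None and size != window:
            problems.append(f"{tensor.name}: {axis} {size} differs from window size {window}")
    return problems


if __name__ == "__main__":
    rgb_input = TensorInfo("input", (None, 3, 256, 256))
    print(check_input_tensor(rgb_input, overlay_count=3, window=256))  # []
    print(check_input_tensor(rgb_input, overlay_count=1, window=256))  # one channel mismatch
```

The function returns a list of problems instead of raising on the first mismatch. This way, every shape conflict can be reported at once.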

Whether a Neural Network classifies or detects objects depends on its inference model. Such a model is created with AI software such as PyTorch. A Neural Network can indicate which type of network it is by defining the INFERENCE_MODE attribute in its metadata.
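The following is a minimal sketch of how software reading a Neural Network could act on the INFERENCE_MODE attribute. It assumes the metadata has already been loaded into a plain string-to-string mapping; ONNX models can carry such key/value pairs in their `metadata_props` field. The mode strings `CLASSIFICATION` and `DETECTION` are hypothetical placeholders. The values the Tygron Platform actually accepts are described on the INFERENCE_MODE page.

```python
from enum import Enum
from typing import Mapping, Optional


class InferenceMode(Enum):
    """Hypothetical mode names; check the INFERENCE_MODE documentation for the real values."""

    CLASSIFICATION = "CLASSIFICATION"
    DETECTION = "DETECTION"


def resolve_inference_mode(metadata: Mapping[str, str]) -> Optional[InferenceMode]:
    """Look up INFERENCE_MODE in string key/value metadata.

    Returns None when the network does not declare a mode, and raises
    ValueError for a value this sketch does not recognise.
    """
    raw = metadata.get("INFERENCE_MODE")
    if raw is None:
        return None
    try:
        # Normalise whitespace and case before matching an enum value
        return InferenceMode(raw.strip().upper())
    except ValueError:
        raise ValueError(f"unsupported INFERENCE_MODE: {raw!r}") from None


if __name__ == "__main__":
    print(resolve_inference_mode({"INFERENCE_MODE": "detection"}))  # InferenceMode.DETECTION
    print(resolve_inference_mode({"producer": "example"}))  # None
```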

Supported Convolution Types

- Image Classification

  - Classifies a picture using labels, together with a predicted probability per label

- Detection (with masks and bounding boxes)

  - Detects one or more features in a picture

  - Predicts the probability of each feature and where it is located
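An Image Classification model often ends with one raw score per label. The sketch below shows one common way to turn those scores into per-label probabilities, using a softmax. It assumes the network outputs unnormalised scores (logits). A network that already applies softmax internally would skip that step. The labels and scores in the usage example are made up for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_labels(scores: Sequence[float], labels: Sequence[str], k: int = 3) -> List[Tuple[str, float]]:
    """Pair every label with its predicted probability and return the k most likely."""
    if len(scores) != len(labels):
        raise ValueError("expected exactly one score per label")
    ranked = sorted(zip(labels, softmax(scores)), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical land-use labels and raw scores for a single image.
    for label, probability in top_labels([2.0, 0.5, -1.0], ["water", "forest", "urban"], k=2):
        print(f"{label}: {probability:.2f}")
```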

Parameters in Metadata

Neural Networks can also store default parameters for an Inference Overlay. These defaults configure the overlay appropriately when the Neural Network is assigned to it. The following parameters are used:

- Producer and version

- Description

- Preferred minimum and maximum Grid cell size (m).

- Inference Overlay attributes.

- The Inference Overlay's legend labels, each with a corresponding value and color (in hex format).

- Maximum number of detectable features per inference window.
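The sketch below shows how three of these parameters could be applied to raw results. It parses a legend label's hex color, keeps at most the maximum number of features per inference window, and keeps a grid cell size inside the preferred range. Several choices are assumptions for illustration and do not describe the Tygron Platform's behavior: all class and function names, the 0.5 probability threshold, and clamping an out-of-range cell size rather than rejecting it.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class LegendLabel:
    """Hypothetical legend entry: a name, the value it represents, and a hex color."""

    name: str
    value: float
    color: str  # e.g. "#1f77b4"

    def rgb(self) -> Tuple[int, int, int]:
        """Parse the hex color (leading '#' optional) into an (r, g, b) tuple."""
        digits = self.color.lstrip("#")
        if len(digits) != 6:
            raise ValueError(f"expected a 6-digit hex color, got {self.color!r}")
        # int(..., 16) raises ValueError for non-hex characters
        return int(digits[0:2], 16), int(digits[2:4], 16), int(digits[4:6], 16)


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection result within one inference window."""

    label: str
    probability: float
    box: Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max


def keep_detections(detections: Sequence[Detection], max_features: int,
                    min_probability: float = 0.5) -> List[Detection]:
    """Drop unlikely detections, then keep at most max_features, most probable first."""
    likely = [d for d in detections if d.probability >= min_probability]
    likely.sort(key=lambda d: d.probability, reverse=True)
    return likely[:max_features]


def clamp_cell_size(requested: float, preferred_min: float, preferred_max: float) -> float:
    """Clamp a grid cell size (m) into the network's preferred range."""
    if preferred_min > preferred_max:
        raise ValueError("preferred_min must not exceed preferred_max")
    return min(max(requested, preferred_min), preferred_max)


if __name__ == "__main__":
    print(LegendLabel("tree", 1, "#00ff00").rgb())  # (0, 255, 0)
    print(clamp_cell_size(0.1, preferred_min=0.25, preferred_max=2.0))  # 0.25
```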

Notes

- Neural networks that are not referenced by an AI Inference Overlay may be removed from your project when a project is saved.

How-to's

- How to export AI Training Data

- How to train your own AI model for an Inference Overlay

- How to import an ONNX file using drag and drop

- How to import an ONNX file for an Inference Overlay

- How to adjust a Neural Networks metadata

See also

- Inference Overlay

- ONNX

- PyTorch

- Demo Training Data Project

References

- [1] Cheatsheet ∙ Found at: https://stanford.edu/~shervine/teaching/cs-230/cheatsheet-convolutional-neural-networks ∙ (last visited: 2024-09-21)

- [2] ONNX ∙ Found at: https://onnx.ai/ ∙ (last visited: 2024-09-21)

- [3] Netron ∙ Found at: https://netron.app/ ∙ (last visited: 2024-10-14)